Hello,

I have been working all weekend trying to create reproducible results with my back-testing. I have obtained tick by tick data from IQfeed on a single continuous futures contract and am looking to optimize a strategy around it. The problem is that I am getting different results with each run (with the same inputs). I do not have any market orders, but only stop and limit orders as entries and exits (The entries are stop orders). Because I have tick by tick data, I would not expect the results to vary at all. However, they still do. I should note, there are no NSF positions either.

Also, based on my understanding from other threads I would expect the stop order exits to be prioritized in any conflict on a single bar even without the tick by tick data. I would think this would mean that there would not be any randomization of the results. However, I am getting widely varying results when using 1 minute granular processing and STILL getting varying results even with the painful tick by tick processing. Because each tick by tick run takes about five minutes instead of 1.3 seconds, I would prefer to use the pessimistic results. However, because of the random nature of the results I cannot be sure about my optimization in either case.

I am also running into a bug where, if I save a strategy with the data scale option of 1 tick, I can no longer select this option under the granular processing menu. This is quite frustrating because, to circumvent the problem, I need to create a clone strategy and then restart WealthLab each time I wish to edit that strategy.

Obviously I can't explain what you're seeing because I don't know any details of the backtest - not even the scale you're running it in (I assume Daily because you're using granular processing). But let's start with this: why would you need to do granular processing to backtest a single contract?

QUOTE: I am also running into a bug where if I save a strategy with the data scale option of 1 tick, I can no longer select this option under the granular processing menu.

Come again? You're running a backtest in a 1 tick scale and looking for more granular processing? I'm really confused.

Thank you for your reply.

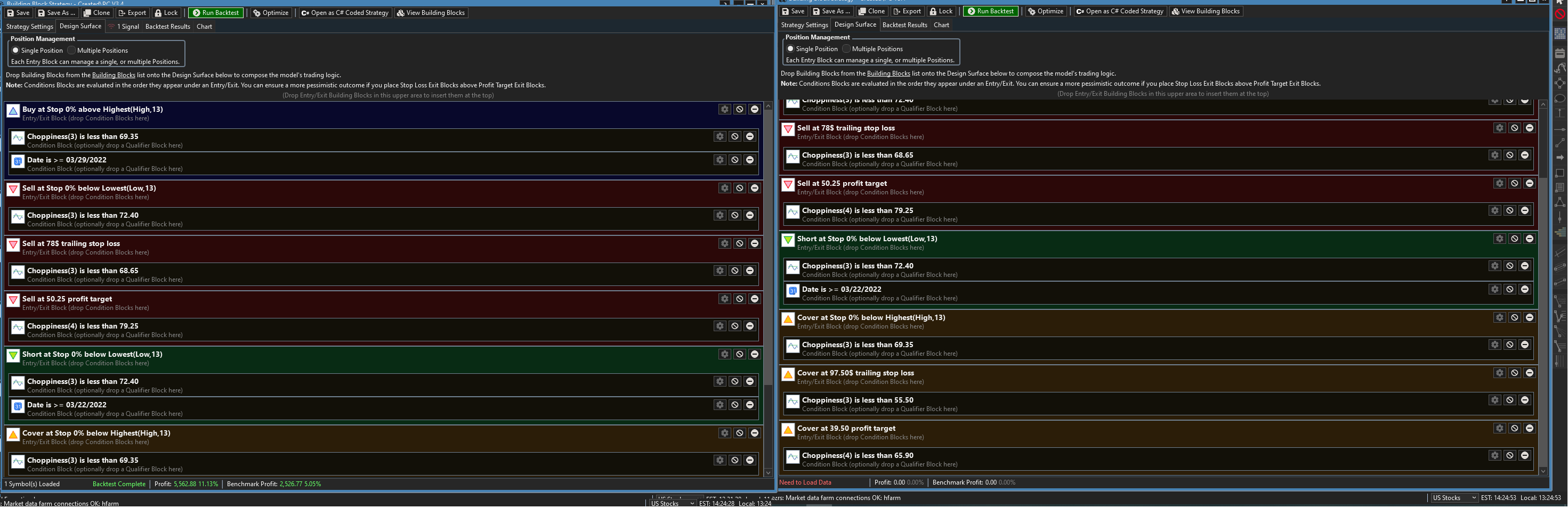

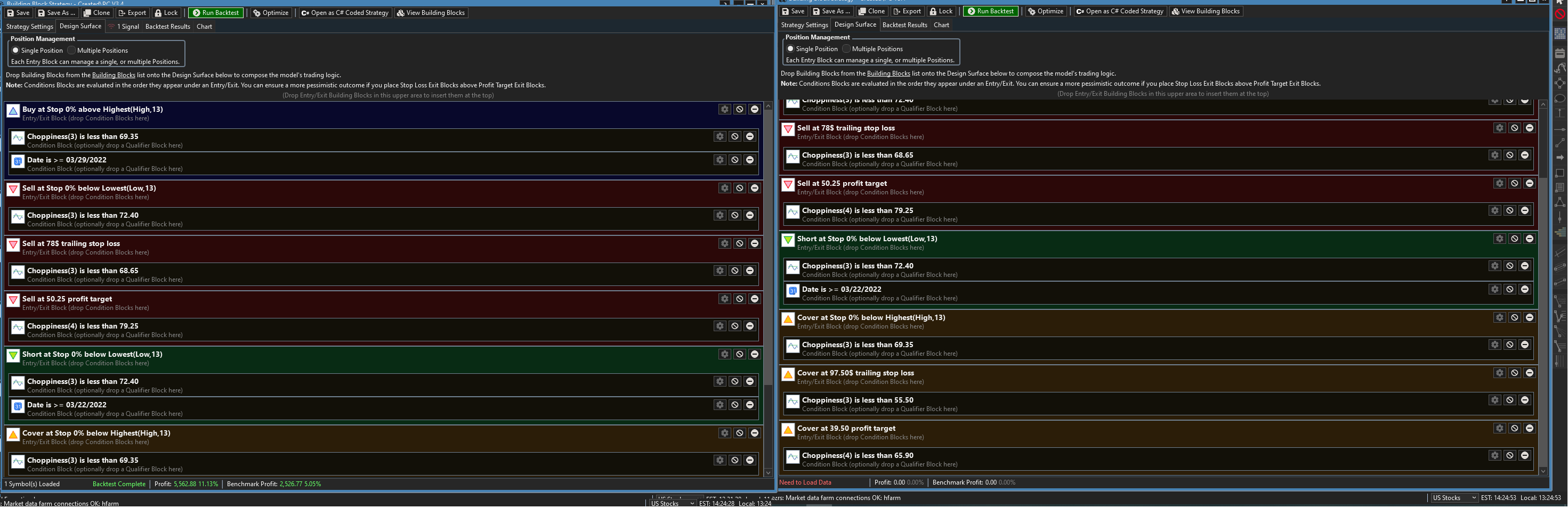

The strategy operates on the 10 minute timeframe. It is a block strategy that follows the trend and utilizes trailing stops and profit target limit orders. I also use the Choppiness Index to avoid taking some entries and exits. I have attached a screenshot of the strategy.

I am using granular processing for a couple of reasons. First, I began using it to get more accurate backtesting results, as a single bar will often touch both the profit-taking limit order and the stop loss order. My understanding is that granular processing allows WL to determine which was touched first. Further, I understand that if WL cannot determine which was touched first, it will use the more pessimistic result.
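To make that expectation concrete, here is a minimal Python sketch of how finer-scale bars can settle which exit of a long position was touched first inside one coarse bar. It is an illustration only, not WL's actual backtester logic; `first_exit_touched` and the `(high, low)` bar tuples are hypothetical names chosen for this example.

```python
from typing import Optional, Sequence, Tuple

Bar = Tuple[float, float]  # (high, low) of one finer-scale bar


def first_exit_touched(
    fine_bars: Sequence[Bar], stop: float, limit: float
) -> Optional[str]:
    """Return which exit of a long position is reached first, or None."""
    for high, low in fine_bars:
        if low <= stop:
            # Also covers the case where this fine bar touches both levels:
            # the pessimistic assumption is that the stop filled first.
            return "stop"
        if high >= limit:
            return "limit"
    return None  # neither exit was reached inside the coarse bar


# One 10-minute bar split into three finer bars; stop at 98.5, target at 102.0.
fine = [(101.0, 99.5), (102.5, 100.5), (101.0, 98.0)]
print(first_exit_touched(fine, stop=98.5, limit=102.0))  # limit
```

Under these assumptions the answer depends only on the data, so repeated runs give the same result. Any run-to-run variation has to come from how unresolved conflicts are broken.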

However, I am obtaining different results upon each backtest (with the same settings). I cannot determine why this is happening, as you say it is only one contract. The data is not changing, and the input is not changing. This past weekend I found that it even happens when I run tick-by-tick granular processing! So I am trying to solve this because it is preventing me from being confident in my optimizations.

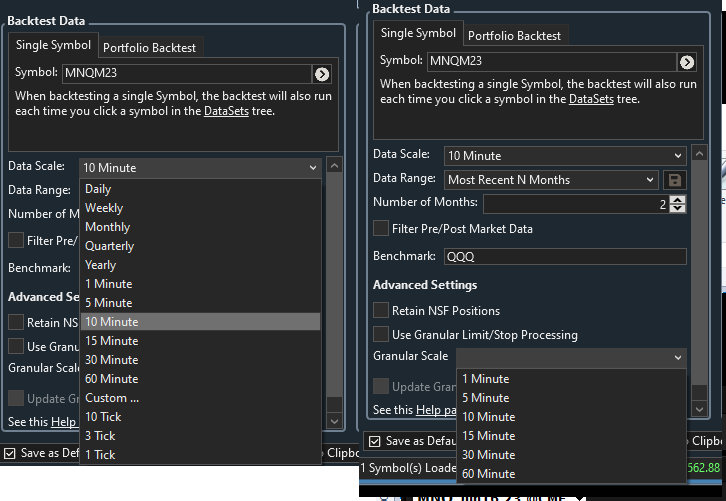

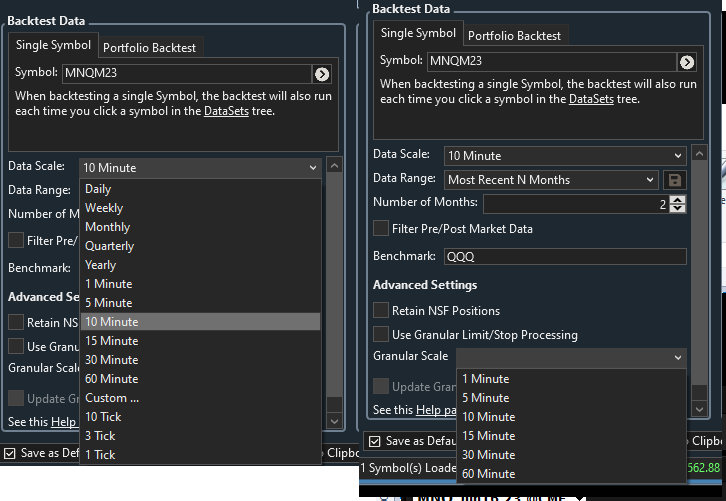

The bug I mentioned is regarding the two menu options on the “Strategy Settings” page. In order to utilize tick by tick data, I must use the custom option under the “data scale” page. However, once I create the 1 tick option and save the strategy, the 1 tick option will no longer be available under the “granular scale” menu the next time I open the strategy. This persists even with a restart of WL and the only workaround I have found is cloning the strategy and restarting WL. However if I save this clone after using the 1 tick setting then the problem will occur again and I will have to create yet another clone. I have attached a screenshot of what I am talking about.

The reason I assumed a Daily backtest was because my working knowledge of granular was that it was targeted for Daily+. The User Guide states, "While this feature would generally be used while backtesting Daily and higher timeframes. You can use it for large intraday scales too - 30-minute or hourly bars for example."

However, I just tested it with 30-min bars and granular processing is not used even at the 30-minute scale.

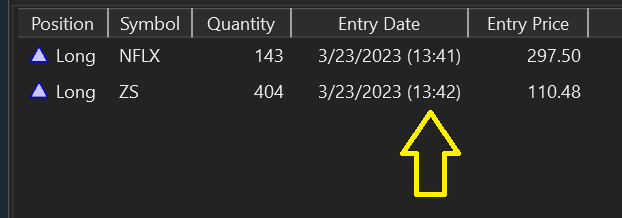

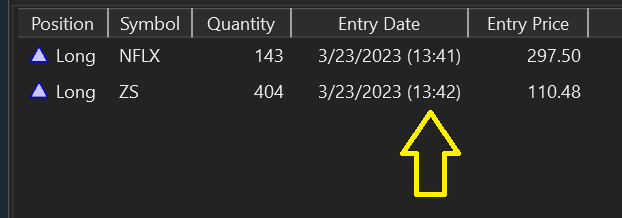

You'll know if you get granular trades by inspecting the Position list and you see Time of Day timestamps in parentheses. Here's a Daily backtest with the granular TODs -

The fact that multiple trades can occur intra-bar is the reason you're getting random results - that's by design.

If your strategy is already set up for intraday, probably it's just best to modify it where required to trade at the more granular timeframe. Otherwise, accept the variability that you're likely to get trading live anyway - and turn on "Live Positions" to stay sync'd.

So are you saying that granular processing has no effect at the 30 minute level, and probably not at any timeframe below that?

I was under the impression that WL would choose the most pessimistic outcome, as is described under the block design surface window. Instead you are saying that WL will choose the outcome randomly? If this is the case, maybe a setting regarding this behavior might be a good feature request, because I am having a hard time wrapping my head around how to optimize a strategy with random outcomes.

I will have to try the lower timeframe idea. Unfortunately my early tests of that have shown worse results for this strategy for whatever reason. 10 minute bars seem to be a sweet spot, but maybe those results are not as reliable as using a smaller timeframe.

Is WL designed more for interday trading instead of intraday trading? Perhaps I am approaching strategy design with WL and intraday trading incorrectly.

QUOTE: So are you saying

I said that, tested it, and wrote it above.

QUOTE: I was under the impression that WL would choose the most pessimistic outcome, as is described under the block design surface window.

Thanks for pointing that out. It was this way until recent builds improved the backtester. We'll update that. Keep reading...

QUOTE: Instead you are saying that WL will choose the outcome randomly?

If it's clear that either a stop OR a limit would fill, then that order is filled. In cases that can't be resolved in which it's possible that both a stop and limit order could fill on the same bar, the outcome is random if you don't apply a transaction weight. You can guarantee a more pessimistic outcome if you apply a higher transaction weight to stop loss orders.

For example, assigning the "High" indicator for Transaction.Weight to stop loss orders and the "Low" indicator to limit profit targets will ensure that stop losses fill ahead of profit targets if both could have been filled on the same bar.
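A toy Python model of that tie-break may help show why weights remove the run-to-run variation. This is a sketch under stated assumptions, not WL's implementation: `pick_fill` and the order names are hypothetical, and the only rule taken from the description above is that the higher weight wins and that unweighted conflicts are settled randomly.

```python
import random
from typing import Dict, Optional


def pick_fill(candidates: Dict[str, Optional[float]], rng: random.Random) -> str:
    """Choose which of several same-bar candidate orders fills.

    `candidates` maps an order name to its weight (None = no weight assigned).
    """
    if all(weight is not None for weight in candidates.values()):
        # Every order carries a weight: the highest one wins, so the
        # outcome no longer depends on chance.
        return max(candidates, key=lambda name: candidates[name])
    # No usable weights: break the tie randomly, which varies between runs.
    return rng.choice(sorted(candidates))


# Bar with High = 105 and Low = 95: weight the stop by High, the target by Low.
weighted = {"stop_loss": 105.0, "profit_target": 95.0}
print(pick_fill(weighted, random.Random()))  # always stop_loss

unweighted = {"stop_loss": None, "profit_target": None}
print(pick_fill(unweighted, random.Random()))  # may differ from run to run
```

Because a bar's High is never below its Low, weighting stops by High and targets by Low always ranks the stop first in this model, which is the pessimistic outcome.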

QUOTE: Is WL designed more for interday trading instead of intraday trading?

A bar is a bar. I hope that answers it. (The difference is what can occur within one bar.)

Ah yes transaction weight. I began learning about using that condition but when I read the help file and watched the videos they said transaction weight should only be used for market orders and that I instead should be focused on using granular processing. I am happy to hear I can also use them to adjust this random behavior into something more consistent. Thank you for these ideas and solutions. I look forward to testing them out.

I ask if WL is more designed for interday trading because it appears some of these features and descriptions do not work for intraday trading, i.e. granular processing and its description in the help file. I am just trying to make sure I'm not barking up the wrong tree trying to make WL work for this particular intraday strategy I am working on.

As we've indicated in the documentation, granular processing was designed to solve the priority problem for End-of-Day trading. A lot of trading happens in one day. A lot less happens in 10 minutes.

We can make that work for shorter intervals, but you are the only user that I'm aware of that has tried to apply this in an intraday context. It doesn't even make sense to me why you wouldn't just trade at a more granular base scale. The strategy is already set up for intraday, so why request 2 series? Just one would result in a quicker backtest and use less memory.

I wouldn't expect you to implement it just for me. I need to run more tests with this strategy, but it appears that even when covering the same span of time (ten 1-minute bars, for example), the 10 minute timeframe works best.

However my primary concern is figuring out how to get consistent backtesting results using the transaction weights or any other method. The fact that my results vary by over 20% with each run is very disconcerting. That was the primary purpose for me to attempt to use the granular processing.

QUOTE: That was the primary purpose for me to attempt to use the granular processing.

Understood. My point is that if you run the strategy in the more-granular scale, you'll achieve the same result.

You can use the ScaleInd indicator to re-scale indicators from, say, 1-Minute to 10-Minutes and use the result to signal trades. Signals will change every 10 minutes but the stop and limit orders will be looking for fills every minute. This is more realistic because in real life the outcome isn't always the most pessimistic - at least I'd like to think so!
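The general idea can be sketched in plain Python. This is not how ScaleInd is implemented; `compress_closes` and `expand_to_base` are hypothetical helpers that only show the mechanics: compute a value on completed 10-minute blocks, then map it back onto the 1-minute bars without look-ahead, so exits can still be checked on every 1-minute bar.

```python
from typing import List, Optional


def compress_closes(closes_1m: List[float], factor: int = 10) -> List[float]:
    """Close of each complete `factor`-minute block (its last 1-minute close)."""
    n_blocks = len(closes_1m) // factor
    return [closes_1m[(i + 1) * factor - 1] for i in range(n_blocks)]


def expand_to_base(
    values: List[float], n_base: int, factor: int = 10
) -> List[Optional[float]]:
    """Map coarse values back onto 1-minute bars without look-ahead.

    Each 1-minute bar sees the most recently completed coarse value;
    bars before the first coarse bar completes get None.
    """
    out: List[Optional[float]] = []
    for i in range(n_base):
        done = (i + 1) // factor  # coarse bars completed by the close of bar i
        out.append(values[done - 1] if done >= 1 else None)
    return out


closes = [float(i) for i in range(25)]  # 25 one-minute closes
coarse = compress_closes(closes)        # two complete 10-minute closes
signal = expand_to_base(coarse, len(closes))
# `signal` changes only when a 10-minute block completes, while a backtest
# loop over `closes` can still test stop and limit levels on every bar.
```

The values before the first completed block are `None` on purpose, so that a strategy cannot act on a 10-minute reading that did not exist yet.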

Very nice that's a great suggestion. Thank you!
