There is this wonderful result visualizer, "Position Metrics".

I have some suggestions to make it even more useful.

Insample / Out-of-Sample

I'd love to see the results for two distinct backtest intervals: Insample and Out-of-Sample. Probably with different colors:

red/green for Insample,

orange/blue for Out-of-sample.

This makes the interpretation of the results much more useful and robust.

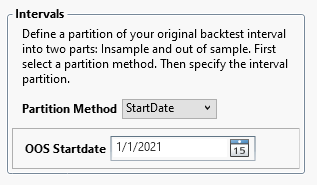

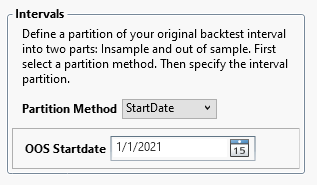

This requires settings for these intervals, which could be borrowed from the IS/OS Scorecard.

QUOTE:

Insample / Out-of-Sample

Either I don't understand your idea or it doesn't make great sense in this context. You're suggesting that this particular visualizer should start behaving like a WFO optimization, in fact performing two backtest runs: for IS and OOS. But IS / OOS is not something inherent or specific to the Position Metrics. Why this and not the others?

QUOTE:

Why this and not the others?

In fact this is the start of quite some more suggestions:

Use IS/OS metrics throughout WL8 - everywhere!

It is not necessary to run two separate backtests.

Just run a single backtest.

Then calculate and collect all results and metrics for the two parts of this backtest interval. First part: Insample, second part: Out-of-sample.

This is how the IS/OS scorecard works. It is quite helpful to avoid over-optimization during manual improvements.
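A minimal sketch of that single-backtest idea, in Python rather than WL8's C# API: take the positions from one run, assign each to Insample or Out-of-sample by its entry date, and compute the same metrics for both parts. The `Position` class, the `split_metrics` function, the choice of entry date as the split key, and the sample numbers are all assumptions for illustration, not how the IS/OS Scorecard is actually implemented.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean


@dataclass
class Position:
    """Hypothetical stand-in for one closed position of a backtest."""
    entry_date: date
    profit_pct: float


def split_metrics(positions, boundary):
    """Group positions by entry date: before `boundary` is IS, the rest is OOS."""
    parts = {"IS": [], "OOS": []}
    for p in positions:
        parts["IS" if p.entry_date < boundary else "OOS"].append(p.profit_pct)

    result = {}
    for name, profits in parts.items():
        n = len(profits)
        result[name] = {
            "count": n,
            "avg_profit_pct": mean(profits) if n else None,
            "win_rate": sum(1 for x in profits if x > 0) / n if n else None,
        }
    return result


# Made-up positions from a single backtest run.
positions = [
    Position(date(2020, 3, 2), 2.0),
    Position(date(2020, 9, 14), -1.0),
    Position(date(2021, 2, 1), 4.0),
    Position(date(2022, 5, 9), -2.0),
    Position(date(2023, 1, 17), 1.0),
]
report = split_metrics(positions, boundary=date(2022, 1, 1))
for part, metrics in report.items():
    print(part, metrics)
```

A visualizer could then draw the two groups in different colors, since every metric exists once per part.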

QUOTE:

... calculate ... results and metrics for ... two parts of this backtest .... First part: In-sample, second part: Out-of-sample. It is ... helpful to avoid over-optimization ...

This is about an optimization end test.

What he's really asking is to "contrast" the In-sample case against the Out-of-sample case to see how they are different. By "contrast" I mean take the difference of their performance slopes. (I do not mean "contrast" in the statistical sense where we are taking the ratio of variances and performing an F-test for significance. Sorry about that confusion.)
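One possible reading of "difference of their performance slopes", sketched in Python: fit a least-squares line to the equity curve of each part and subtract the two slopes. Interpreting "performance slope" as equity change per bar is an assumption, and `ls_slope`, `slope_contrast`, and the equity values are hypothetical.

```python
def ls_slope(values):
    """Least-squares slope of `values` against the bar index 0..n-1."""
    n = len(values)
    if n < 2:
        raise ValueError("need at least two points to fit a slope")
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def slope_contrast(equity, split_index):
    """IS slope minus OOS slope; a large positive value means OOS is flatter."""
    return ls_slope(equity[:split_index]) - ls_slope(equity[split_index:])


# Made-up equity curve: steep for the first five bars, flatter afterwards.
equity = [100, 102, 104, 106, 108, 108.5, 109, 109.5]
contrast = slope_contrast(equity, split_index=5)
print(contrast)
```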

I suppose a statistical contrast could also be done. That would please research types for publication, but "most" WL users aren't researchers.

---

I think the end test for the optimizer is something the optimizer should do. But I also think performing a "formal" F-test as part of an optimizer end test is overkill. I've only seen that done in stepwise linear regression algorithms to steer the model permutation stepping automatically.

I know this has been mentioned before by Dr Koch but I think an area of development generically for WL would be tests that help determine if the strategy is curve fitted or not. Many of these things can be done manually, but it would ideally be nice to have these processes streamlined.

Along those lines some approaches that I have seen are:

- defining In-Sample vs Out of Sample data segments

- looking at ratios of OOS/IS metrics

- parameter stability

- randomizing the bar data
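The OOS/IS ratio item from the list above could look like this in Python. This is a rough sketch: the metric names, the sample values, and the 0.5 warning threshold are assumptions chosen for illustration, not an established rule.

```python
def oos_is_ratios(is_metrics, oos_metrics):
    """Return OOS/IS for every metric present in both dicts (None if undefined)."""
    ratios = {}
    for name, is_value in is_metrics.items():
        oos_value = oos_metrics.get(name)
        if oos_value is None or is_value == 0:
            ratios[name] = None  # ratio is undefined
        else:
            ratios[name] = oos_value / is_value
    return ratios


def flag_degraded(ratios, threshold=0.5):
    """Names of metrics whose OOS value fell below `threshold` times the IS value."""
    return sorted(n for n, r in ratios.items() if r is not None and r < threshold)


# Made-up metrics for the two parts of one backtest.
is_metrics = {"profit_factor": 2.0, "avg_profit_pct": 1.6}
oos_metrics = {"profit_factor": 1.5, "avg_profit_pct": 0.4}

ratios = oos_is_ratios(is_metrics, oos_metrics)
flagged = flag_degraded(ratios)
print(ratios, flagged)
```

A ratio near 1 means the metric held up out of sample. This simple form only makes sense for metrics where higher is better and the IS value is positive.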

This feature request is in my opinion the most important for developing robust strategies.

I don't use a simple in-sample / out-of-sample period. I segment the first 70% of the data into 7-15 IS/OOS periods to ensure that my IS and OOS cover every regime (high vol / low vol / bull / bear). This identifies potential candidates.

I then have a validation period that is the next 20% that I run my robustness test on (including the original 70%). This eliminates the majority of the candidates (about 80-90%).

My last 10% of unseen data is a final validation period.
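The 70/20/10 layout described above can be expressed as bar-index ranges. The Python sketch below is only one interpretation: it assumes each of the 7-15 periods is a consecutive slice of the first 70% that is itself cut into an IS part followed by an OOS part, and the 70% IS share inside each period is a made-up default. `partition_bars` and its parameters are hypothetical names.

```python
def partition_bars(n_bars, n_periods=10, is_percent=70):
    """Return half-open (start, end) index ranges for each stage of the workflow."""
    dev_end = n_bars * 70 // 100  # first 70%: candidate search
    val_end = n_bars * 90 // 100  # next 20%: robustness validation

    periods = []
    for k in range(n_periods):
        start = dev_end * k // n_periods
        end = dev_end * (k + 1) // n_periods
        cut = start + (end - start) * is_percent // 100
        periods.append({"is": (start, cut), "oos": (cut, end)})

    return {
        "periods": periods,
        "validation": (dev_end, val_end),
        "final": (val_end, n_bars),  # last 10%: unseen data
    }


plan = partition_bars(1000, n_periods=10)
print(plan["periods"][0], plan["validation"], plan["final"])
```

Since the robustness test is described as running on the validation slice together with the original 70%, that run would cover bars 0 up to the end of the `validation` range rather than the 20% slice alone.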

If you guys decide to implement this feature, it would be great to get everyone's thoughts on how they approach this.

QUOTE:

This feature request is in my opinion the most important

Yes!

We decided to decline this because WL doesn't break down a backtest into in-sample and out-of-sample periods, so it would require too much of an architectural change to get the Visualizers to support this. You can always run two backtests in two date ranges side by side and compare the results in any Visualizer.

Hi Guys,

I was going to code this up myself. This was one of the underlying reasons I wanted to be able to run backtests and optimizations through code. The problem I ran into was that you can't change the parameter settings of the strategy through code given the current implementation.

If you create a LoadStrategy method and change StrategyRunner so that it accepts a reference to the strategy instead of a string with the name of the strategy, the user can manipulate the strategy and its parameters:

Load and Save a Strategy

UserStrategyBase strategy = LoadStrategy("name");

strategy.SaveToFile("name");

Run a Single Backtest

StrategyRunner sr = new StrategyRunner();

Backtester bt = sr.RunBacktest(strategy);

Run a strategy batch (run in parallel)

List<Backtester> bts = sr.RunBacktest(strategies); // strategies: a List<UserStrategyBase>

This would give the user full flexibility to create almost anything. I wanted to create my own genetic strategy generator and automate my entire workflow for robustness, forward testing, etc.

StrategyRunner has an overload of RunBacktest that accepts a Strategy instance:

public Backtester RunBacktest(StrategyBase sb, Strategy s = null, StrategyExecutionMode em = StrategyExecutionMode.Strategy)

That's perfect. Thank you. I will try to implement my ideas and see where I get to.
